The world is no longer waiting for virtual reality (VR) to mature. By 2025, VR and extended reality (XR) technologies had crossed a tipping point. What once felt like futuristic entertainment is becoming a working tool across industries: training, collaboration, design, health, retail, education, and more. At the core of that shift is VR development, the discipline of building immersive, interactive 3D environments that people can enter, explore, and use meaningfully.

In 2026, VR development stands as a mature craft. It no longer means “toy projects” or “experiments.” Instead, it’s a strategic capability that combines 3D design, real‑time rendering, physics, spatial interaction, audio, UX, optimization, and sometimes AI and cloud architecture. In this guide, we’ll walk you through:

- What VR development really means in 2026

- The core components of building VR/XR experiences

- A typical workflow from concept to deployment

- Why VR development matters beyond gaming

- What happened in 2025 — major trends, data, and shifts

- What 2026 looks like: emerging trends, opportunities, and challenges

- What leaders — creators, product people, enterprises — should consider now

If you’re evaluating immersive experiences for your product, company, or vision, this guide gives you a 360° view.

What Exactly Is VR Development?

At its simplest: VR development is the process of conceiving, designing, building, and delivering immersive 3D environments that users can enter using a head-mounted display (HMD) or XR wearables. These environments are more than static 3D models — they’re interactive, spatial, real-time, and often responsive. Users can walk around, pick up objects, manipulate them, collaborate with others, or simply observe.

But the magic lies in one word: **presence**. Presence is the psychological sensation that you’re “somewhere else” — a virtual factory floor, a remote collaboration studio, a realistic simulation room, or even a distant planet. To evoke presence, VR development must blend multiple disciplines: 3D modeling, lighting, spatial audio, physics, interaction design, performance optimization — all while thinking in real time.

Unlike typical 2D apps or even traditional 3D games, VR development requires special attention to human factors: spatial comfort, frame rate stability, latency, intuitive interactions, and sense of scale. In 2026, VR development often extends into XR (extended reality), blending virtual environments with real-world inputs (passthrough, mixed reality), AI-driven content, and cross-device compatibility.

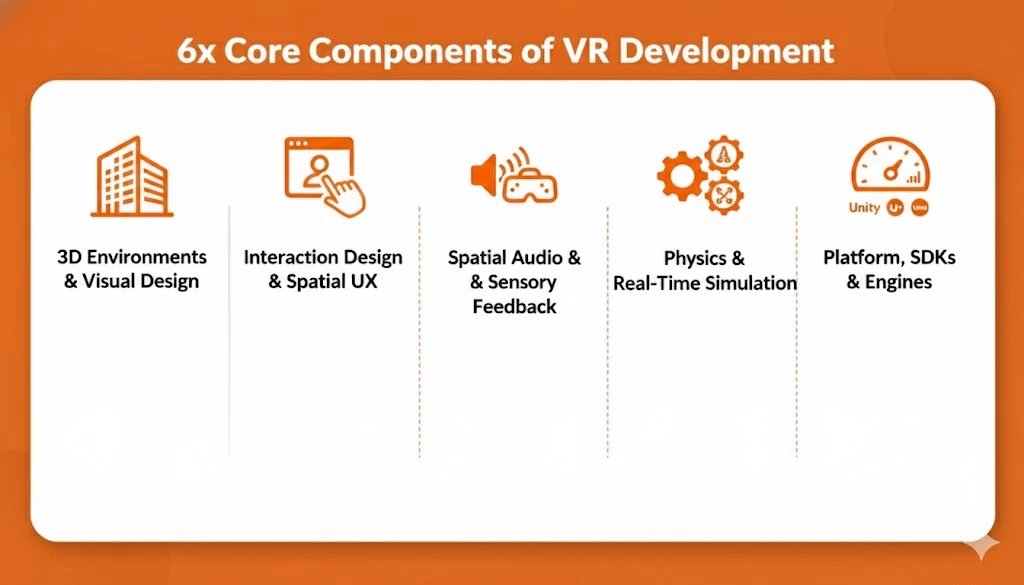

The Foundations: Core Components of VR Development

3D Environments & Visual Design

Everything in a VR experience starts with the environment and the 3D assets that populate it. Whether you’re building a realistic factory floor for training, a stylized virtual showroom, or a fantasy world for exploration — modeling, textures, materials, lighting, shadows, and environmental details set the foundation.

Crafting these environments typically relies on tools like Blender, Maya, Cinema 4D, Houdini, or other 3D content creation suites. Artists and designers build the geometry, apply textures and materials, define lighting and reflections, and think about how objects interact with each other. But in VR, it’s not just about beauty — it’s about **readability, performance, and comfort**. High-fidelity visuals lose their value if performance drops or users feel uncomfortable. Moreover, good environment design helps with cognitive mapping — users subconsciously understand space, scale, landmarks — which fosters immersion.

Interaction Design & Spatial UX

VR isn’t a passive medium. Users expect to do things — grab objects, move around, push buttons, interact with tools, teleport, walk, or even collaborate with others. That’s why interaction design and spatial UX are critical.

Interactions in VR come in various forms: hand tracking, controllers, gaze-based selection, ray-casting menus, physical object manipulation, teleportation or locomotion systems, physics-based object behavior, or even gesture-based commands. Designers must balance freedom, intuitiveness, and comfort. UI design in VR can’t just copy 2D interface patterns: UI panels might float, or exist as part of the environment. Spatial UX must account for scale, reach, sight-lines, occlusion, user orientation, and environmental constraints.
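Ray-cast selection, one of the interaction styles listed above, can be sketched in a few lines. This is a minimal illustration, not any engine's API: it assumes each selectable object is approximated by a bounding sphere, and the names `Target`, `ray_hit_distance`, and `pick_target` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Target:
    """A selectable object approximated by a bounding sphere (hypothetical type)."""
    name: str
    center: Vec3
    radius: float


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(_dot(v, v))
    if length == 0.0:
        raise ValueError("ray direction must be non-zero")
    return (v[0] / length, v[1] / length, v[2] / length)


def ray_hit_distance(origin: Vec3, unit_dir: Vec3, target: Target) -> Optional[float]:
    """Distance along a unit-length ray to the target sphere, or None on a miss."""
    to_center = _sub(target.center, origin)
    along = _dot(to_center, unit_dir)  # projection of the center onto the ray
    if along < 0.0:
        return None  # sphere center is behind the pointer; ignored in this sketch
    closest_sq = _dot(to_center, to_center) - along * along
    radius_sq = target.radius * target.radius
    if closest_sq > radius_sq:
        return None  # the ray passes outside the sphere
    return max(0.0, along - math.sqrt(radius_sq - closest_sq))


def pick_target(origin: Vec3, direction: Vec3,
                targets: Sequence[Target]) -> Optional[Target]:
    """Return the nearest target hit by the pointer ray, if any."""
    unit_dir = normalize(direction)
    best, best_dist = None, math.inf
    for target in targets:
        dist = ray_hit_distance(origin, unit_dir, target)
        if dist is not None and dist < best_dist:
            best, best_dist = target, dist
    return best
```

In practice an engine's physics raycast would replace the sphere test, but the selection rule (nearest hit along the pointer ray wins) stays the same.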

Audio & Sensory Feedback

Immersion isn’t just visual. **Spatial audio** — where sound originates from proper positions, reflects off surfaces, attenuates with distance, and dynamically changes as a user moves — makes the virtual world feel real. Most VR development incorporates 3D audio engines that simulate directional sound, occlusion, reverb, and distance-based attenuation. Combined with haptic feedback via controllers, and in some cases environmental effects, sensory feedback deepens immersion and supports interaction realism.
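To make distance-based attenuation concrete, here is a small sketch of an inverse-distance rolloff curve of the kind many audio APIs offer. The function name and the default values for `ref_distance` and `rolloff` are assumptions for illustration; real audio engines expose their own parameters and add occlusion and reverb on top.

```python
import math
from typing import Sequence


def distance_gain(listener: Sequence[float], source: Sequence[float],
                  ref_distance: float = 1.0, rolloff: float = 1.0) -> float:
    """Gain in [0, 1] for a sound source, using an inverse-distance model.

    Inside ref_distance the sound plays at full volume; beyond it the gain
    falls off as ref / (ref + rolloff * (d - ref)).
    """
    distance = math.dist(listener, source)
    distance = max(distance, ref_distance)  # clamp so gain never exceeds 1.0
    return ref_distance / (ref_distance + rolloff * (distance - ref_distance))
```

With the defaults, a source 2 m away plays at half volume and a source 5 m away at one fifth, which is why distant machinery on a virtual factory floor fades into the background as the user walks off.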

Physics & Real-Time Simulation

For VR experiences involving realistic interaction, especially simulations, training, or engineering, physics must behave as expected. Gravity, collisions, friction, object mass, and motion all influence how users perceive and operate in virtual space. Real-time physics adds complexity: you can’t pre-script every possible interaction, so you must allow dynamic, user-driven manipulation. When done right, physics makes objects behave convincingly, whether that’s dropping a tool on a tabletop, opening a cabinet, or simulating mechanical behavior on a factory floor.
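The "dropping a tool on a tabletop" case can be sketched as a one-dimensional simulation. This is a simplified illustration, not how any particular engine implements physics: it assumes a fixed 90 Hz physics step, semi-implicit Euler integration, and a hypothetical `Body` type with a single height coordinate.

```python
from dataclasses import dataclass

GRAVITY = -9.81        # m/s^2, acting along the vertical axis
FIXED_DT = 1.0 / 90.0  # assumed physics step; not tied to any real headset


@dataclass
class Body:
    """A dropped object tracked only by its height above the tabletop."""
    height: float
    velocity: float = 0.0
    restitution: float = 0.3  # fraction of speed kept after a bounce (assumed)


def step(body: Body, dt: float = FIXED_DT) -> None:
    """Advance one physics step: update velocity first, then position."""
    body.velocity += GRAVITY * dt
    body.height += body.velocity * dt
    if body.height < 0.0:  # hit the tabletop: clamp and bounce
        body.height = 0.0
        body.velocity = -body.velocity * body.restitution


def advance(body: Body, accumulator: float, frame_dt: float) -> float:
    """Consume a variable render-frame time in fixed physics steps.

    Returns the leftover time to carry into the next frame.
    """
    accumulator += frame_dt
    while accumulator >= FIXED_DT:
        step(body)
        accumulator -= FIXED_DT
    return accumulator
```

Decoupling the physics step from the render frame, as `advance` does, keeps object behavior consistent even when the rendered frame rate fluctuates.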

Performance & Optimization

Of all underlying demands, **performance is the linchpin**. VR and XR demand high frame rates (commonly 72–120 FPS depending on hardware) and low latency. Drop below the target frame rate and presence shatters; users may feel discomfort or motion sickness.
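Those frame rates translate directly into a per-frame time budget, which is often the more useful number when profiling. The helper names below are hypothetical; the arithmetic is simply 1000 ms divided by the refresh rate.

```python
from typing import Iterable


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def missed_frames(frame_times_ms: Iterable[float], refresh_hz: float) -> int:
    """Count frames that took longer than the budget for this refresh rate."""
    budget = frame_budget_ms(refresh_hz)
    return sum(1 for t in frame_times_ms if t > budget)


for hz in (72, 90, 120):
    print(f"{hz} Hz -> {frame_budget_ms(hz):.2f} ms per frame")
# 72 Hz -> 13.89 ms per frame
# 90 Hz -> 11.11 ms per frame
# 120 Hz -> 8.33 ms per frame
```

Everything the application does in a frame (simulation, rendering for both eyes, audio, input) has to fit inside that window, which is why a 120 Hz target leaves so little slack.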

To maintain performance, developers use techniques such as polygon count reduction, Level of Detail (LOD), mesh and texture optimization, occlusion culling, draw call batching, lightweight shaders, efficient memory management, and frame-time budgets. For many VR developers, optimization isn’t an afterthought; it’s baked in from the design phase.
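As one example from that list, Level of Detail comes down to swapping in cheaper meshes as objects get farther from the viewer. The sketch below assumes distance-based switching with made-up thresholds and mesh names; engines typically provide this as a built-in component, often driven by screen-space size rather than raw distance.

```python
from bisect import bisect_right

# Hypothetical switch distances in meters and the mesh used in each band.
LOD_DISTANCES = [5.0, 15.0, 40.0]
LOD_MESHES = ["lod0_high", "lod1_medium", "lod2_low", "lod3_billboard"]


def select_lod(distance_m: float) -> str:
    """Pick the mesh variant for an object at the given distance from the camera."""
    # bisect_right counts how many thresholds the distance has reached,
    # which is exactly the index of the LOD band to use.
    return LOD_MESHES[bisect_right(LOD_DISTANCES, distance_m)]
```

An object 2 m away gets the full-detail mesh, one at 20 m gets the low-poly variant, and anything past 40 m collapses to a billboard.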

Platform, SDKs & Engines

Under the hood, VR development relies on real-time 3D engines and SDKs that abstract hardware details, provide rendering pipelines, input support, interaction frameworks, and more. In 2026, common platforms include:

- **Unity** — widely used for both VR and XR, with a robust ecosystem of plugins and cross-platform support.

- **Unreal Engine** — chosen for high-fidelity visuals, realistic rendering, and cinematic-quality VR experiences.

- **WebXR / browser‑based VR/XR** — for lighter, more accessible experiences that run in the browser without requiring heavy downloads.

- Emerging open‑platform XR frameworks, especially as AR/MR glasses gain traction.

Developers often layer these engines with physics libraries, audio engines, input frameworks, and sometimes proprietary modules for networking, analytics, or AI integration.

The VR Development Workflow — From Idea to Immersion

Building a VR experience follows much the same arc as a traditional software project, but the additional demands of spatial design, performance, and human comfort make certain stages more critical. Here’s how a typical VR development project unfolds:

1. Ideation and Conceptualization

Everything starts with a problem or opportunity. Why are you building VR? For training? For collaboration? For visualization? For therapy? Defining the goal helps shape the scope, interaction model, target audience, and success criteria (e.g. learning outcomes, engagement, ROI).

This phase involves sketching out user flows, spatial requirements (e.g. seated vs room-scale), interaction methods, and high-level wireframes. Questions to ask: Will users be standing, seated, or walking? Will they need room-scale tracking? Do you need networked multiplayer?

2. Prototyping & Proof-of-Concept

Before investing heavily in 3D art and polish, teams often build quick prototypes using simple geometry (boxes, placeholders) to test interactions, movement comfort, scale, reach, and general feel. This phase is critical to discover issues early — motion sickness, awkward interactions, or UI placement problems. Prototyping helps validate whether the planned interaction model works, whether teleportation feels better than smooth locomotion for your audience, whether object grabbing feels natural, and whether audio cues make sense.

3. Content Creation & Development

Once prototypes are validated, the content phase begins. Artists build detailed models, texture them, and define materials. Designers craft environments, audio designers create spatial soundscapes, and developers code interaction logic, physics behaviors, animations, and the UI and HUD, integrating analytics or a backend if needed. Throughout this phase, performance must be considered: content needs to balance fidelity with optimization.

4. Optimization & Performance Tuning

With content in place, the development team must optimize for target hardware. This includes reducing polycounts, culling unseen geometry, adjusting texture resolutions, optimizing shaders, limiting draw calls, and ensuring stable frame rates. Developers may create multiple quality levels (high/medium/low) for different devices (PC‑VR, standalone, XR glasses, etc.) — enabling wider reach.
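Per-device quality levels are often expressed as a small table of settings chosen at startup. The sketch below is illustrative only: the device class names, the `QualityProfile` fields, and every number in it are assumptions, not recommendations for any specific headset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityProfile:
    """Rendering limits for one class of device (all values illustrative)."""
    texture_size: int     # maximum texture edge length in pixels
    max_triangles: int    # per-frame triangle budget
    shadows: bool         # whether real-time shadows are enabled
    target_fps: int       # frame rate the profile is tuned for


PROFILES = {
    "pc_vr": QualityProfile(4096, 2_000_000, True, 90),
    "standalone": QualityProfile(2048, 500_000, False, 72),
    "xr_glasses": QualityProfile(1024, 150_000, False, 60),
}


def profile_for(device_class: str) -> QualityProfile:
    """Return the profile for a device class, falling back to the cheapest one."""
    return PROFILES.get(device_class, PROFILES["xr_glasses"])
```

Falling back to the most conservative profile for unknown hardware is a deliberate choice here: a new device that renders at lower fidelity is a better first experience than one that drops frames.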

5. Testing: Usability, Comfort & Stability

VR testing is more complex than typical QA. Beyond checking bugs and crashes, teams must evaluate **user comfort**. Do people feel nauseous? Are interactions intuitive? Do UI elements obstruct view or break immersion? Are movement mechanics causing disorientation? Testing should include users who are not familiar with VR, as well as experienced users, to surface different pain points. Accessibility, comfort, and onboarding matter — especially for non‑gaming audiences.

6. Deployment & Distribution

Once polished, optimized, and tested, the VR product is ready for deployment. For consumer-facing experiences, that may mean publishing on headset app stores (e.g. the Meta Horizon Store, formerly the Oculus Store; Steam for SteamVR titles; or other XR storefronts). For enterprise use cases, distribution might be via private packages, enterprise app platforms, or custom deployment pipelines. Post-launch, teams often monitor analytics (user behavior, retention, comfort reports), gather feedback, fix bugs, and iterate on content or UX based on usage patterns.

Why VR Development Matters Beyond Gaming

When VR entered the mainstream, many assumed its destiny would be gaming — immersive worlds, video games, entertainment. And while gaming still plays a significant role, the real value of VR (and XR) in 2026 lies in utility, efficiency, and experience across many sectors.

Training & Simulation

VR shines in training and simulation. Instead of constructing expensive physical mockups (e.g., factories, labs, dangerous environments), organizations can build virtual replicas. Employees can train safely, rehearse procedures, practice maintenance, respond to emergencies, or simulate rare events, all with far less risk, cost, and resource waste.

Sectors like healthcare, aviation, manufacturing, energy, automotive, and logistics are increasingly adopting VR-based training programs. VR allows repetition, scenario variation, instant feedback, and analytics — often providing better training outcomes than traditional classroom or physical simulations.

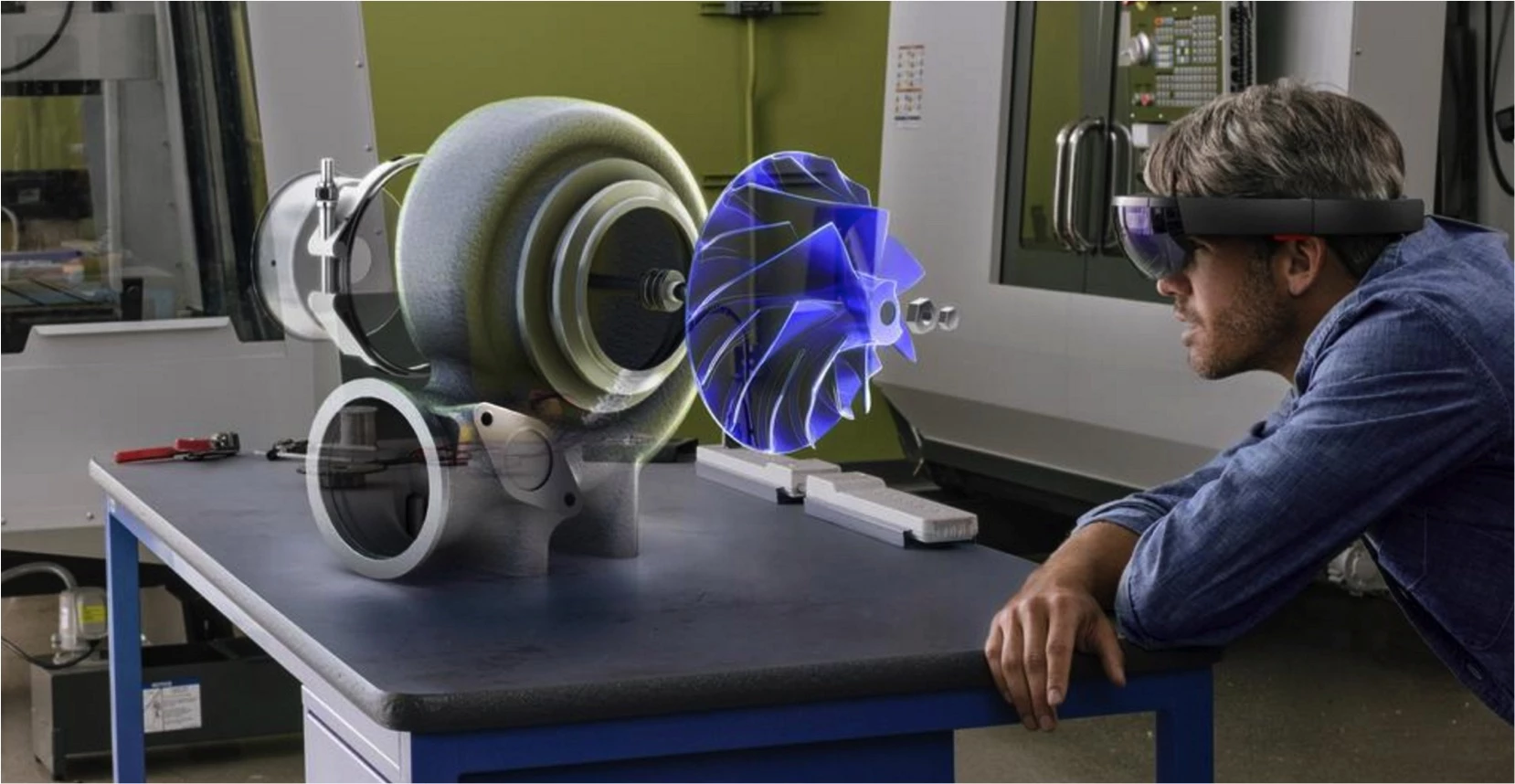

Remote Collaboration & Design

In a globalised world where teams are distributed across geographies, VR (or XR) offers a natural “shared space.” Designers, architects, engineers, and product teams can meet inside a virtual studio, inspect 3D models, make changes collaboratively, walk around a building prototype, or simulate a user journey.

This spatial collaboration is more intuitive than “screen share + zoom call,” because it supports depth, scale, and natural spatial relationships — more like being physically present together.

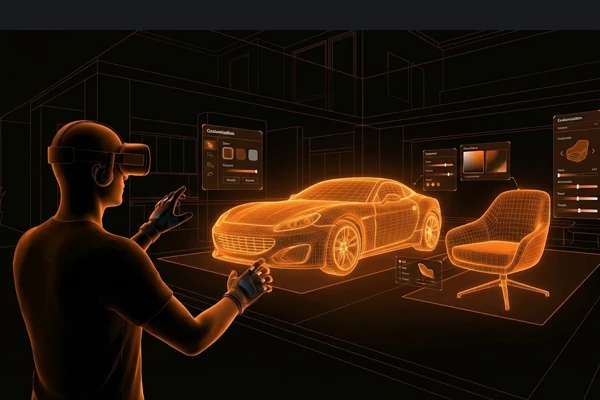

Product Visualization & Retail

For retail, real estate, automotive, or design-focused brands, immersive VR/XR experiences bring products to life. Users can visualize furniture in their living rooms, inspect cars inside and out, walk through houses, or test configurations in 3D. This can lead to higher buyer confidence, better conversions, and fewer returns.

Especially now, with hybrid consumer behavior, such immersive “try-before-you-buy” experiences bridge the gap between online convenience and offline tangibility.

Education & Therapy

VR offers novel possibilities in education and therapy. From immersive lessons (history, science, geography) to simulated labs, virtual field trips, or collaborative classrooms — VR removes physical constraints. In therapy and mental health, VR can simulate environments for exposure therapy, relaxation, social interaction, or rehabilitation.

For many uses, VR combines engagement, safety, repeatability, and emotional immersion in ways that traditional media can’t.

2025 in Review — What Changed & Why It Matters

The year 2025 turned out to be a watershed for the immersive ecosystem. Several market shifts, hardware innovations, and strategic realignments reshaped how the development, adoption, and future of VR/XR are viewed.

Market Rebound & Shift Toward XR / Smart Glasses

According to a report from International Data Corporation (IDC), the global AR/VR headset market grew 18.1% year-over-year in Q1 2025. Moreover, while traditional VR headsets remain relevant, the real momentum came from optical see-through (OST) smart glasses and mixed reality (MR)/extended reality (XR) devices. Vendors such as XREAL and Viture saw dramatic growth — Viture posted a staggering 268.4% year-on-year growth. IDC analysts expect MR and XR devices — not pure VR — to drive the next wave of growth. This shift reflects mounting user preference for lighter, more wearable, socially acceptable devices that blend virtual content with real-world awareness — ideal for everyday use, enterprise adoption, and hybrid workflows.

High-End VR Hardware Faces Challenges; Smart Glasses Gain Ground

Despite a dominant player like Meta continuing to lead the VR market, 2024–2025 saw global VR headset shipments shrink. Industry data suggests a 12% decline in VR shipments in 2024. Some of these challenges stem from well-known VR adoption pain points: hardware cost, headset weight, battery life, heat, lack of compelling non-gaming content, limited upgrade incentive, and comfort issues. In response, companies are pivoting: lighter smart glasses, MR/XR platforms, and even “AI glasses” (smart glasses augmented with AI capabilities) are gaining traction — as hardware becomes more wearable and versatile.

Convergence of AI, Cloud & XR — New Opportunities for VR Development

2025 wasn’t just about hardware. Behind the scenes, VR is evolving with AI, cloud, and new interaction paradigms. Research and development showed early breakthroughs blending generative AI, real-time avatar reconstruction, and hybrid passthrough VR/MR experiences — blurring the line between real and virtual presence. As XR platforms mature, developers begin to envision immersive systems where content, visuals, audio, and even user avatars are dynamically generated, personalized, or adapted in real-time. This reduces friction in content creation, lowers cost, and increases flexibility — opening immersive tech to smaller teams and faster iteration cycles. Furthermore, cloud-based VR streaming (leveraging real-time rendering + adaptive streaming) started gaining viability, lowering the barrier for high-fidelity immersive experiences without high-end local hardware — a big shift for enterprises and global audiences.

Market Forecasts Point to Rapid Growth — But With New Form Factors

Market forecasts published in 2025 projected combined AR/VR and smart‑glasses shipments of as many as 14.3 million units for the year, fueled by growth in “display-less” smart glasses and lightweight XR wearables. This suggests that while traditional VR may slow down, the broader immersive market (XR + smart glasses + MR) is accelerating, making 2026 a potentially pivotal year.

What Major Players Are Doing — Signals from Industry Leaders

Meta remains dominant in VR hardware, but its focus is shifting. Even while holding ~50.8% market share in AR/VR headsets in 2025, Meta’s product roadmap increasingly emphasizes lighter, more wearable XR devices over bulky VR headsets. Meanwhile, vendors like XREAL and Viture, which specialize in optical‑see‑through smart glasses, are growing rapidly, indicating strong demand for lightweight devices with mixed reality capabilities. In parallel, AI-driven innovations such as generative environment creation, AI-enabled avatars, pass‑through VR compositing, and cloud rendering pipelines are becoming part of the core stack for next-gen immersive development. Taken together, these moves suggest immersive tech is shifting from niche to mainstream: from “gaming toys” to “platforms for work, collaboration, training, and spatial computing.”

What to Expect in 2026 & Beyond: Trends, Opportunities and Key Considerations

Based on 2025’s developments and current technology roadmaps, here’s what VR developers, product leaders, and enterprises should expect over the next 12–24 months:

XR First — Mixed Reality & Smart Glasses Will Gain Ground

Expect XR (mixed reality, smart glasses, optical‑see-through devices) to grow faster than “pure” VR. As optics improve (waveguides, pancake lenses, lighter hardware), and as form factors become more wearable, people will adopt immersive devices for everyday tasks: remote collaboration, enterprise workflows, spatial productivity, design reviews, and more. Standalone VR headsets won’t disappear — but their dominance will erode gradually. Use cases requiring deep immersion (training sims, design visualization, high-fidelity gaming) will still rely on VR — but lighter XR devices will take a growing share.

AI-Powered Content, Dynamic Worlds & Adaptive Experiences

With AI-powered tools and generative workflows becoming more sophisticated, expect VR development to shift towards dynamic environments. Instead of manually building every asset or scene, developers may use generative AI to create environment variants, texture variations, or even narrative-driven interactions. User representation will evolve too: AI-driven avatars, real-time pass-through compositing, and adaptive UX (changing UI based on user behavior or preferences) will make immersive experiences more personal, scalable, and socially engaging. In short — immersive experiences will get smarter, more adaptive, and more affordable to build.

Cloud & Streaming-Based VR/XR Becomes Practical

As cloud rendering, adaptive streaming, foveated rendering, and remote compute technologies mature, high-fidelity VR/XR experiences will no longer require expensive local hardware. Instead, rendering can happen on powerful servers, streamed to lightweight headsets or glasses. For businesses, this opens up huge possibilities: deploy once, stream globally; remove hardware constraints; scale faster; and offer high-end immersive experiences without heavy upfront capital investment.

Enterprise Integration & Use-Case Expansion

Expect immersive tech to penetrate deeper into enterprise workflows: training, safety simulations, remote maintenance assistance, collaborative design, spatial data visualization, remote inspections, interactive onboarding, and more. As hardware gets lighter and usage becomes more common, enterprise adoption will rise — especially where safety, cost-savings, or collaboration efficiency are involved.

Rise of Standards, Privacy, and Ethics

With broader adoption come bigger responsibilities. As VR/XR and AI converge, issues around privacy, data collection, environment scanning, gaze tracking, biometric data, and user behavior tracking will surface. In 2026 and beyond, developers and companies will need to adopt transparent data policies, user consent practices, privacy-by-design, and ethical guidelines to build trust. Furthermore, standards for interoperability, cross-platform compatibility, and accessibility will become important as the number of devices, form factors, and use cases multiply.
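One way to read "privacy-by-design" in code is that nothing is collected until the user has opted in for that specific kind of data. The sketch below is a hypothetical in-memory example; the class name, category strings, and the choice to discard buffered events on revocation are all assumptions, and a real deployment would also need to follow applicable regulation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set, Tuple


@dataclass
class TelemetryBuffer:
    """Collects events only for categories the user has explicitly granted."""
    granted: Set[str] = field(default_factory=set)  # empty by default: opt-in
    events: List[Tuple[str, Dict[str, Any]]] = field(default_factory=list)

    def grant(self, category: str) -> None:
        self.granted.add(category)

    def revoke(self, category: str) -> None:
        """Withdraw consent and drop anything already buffered for the category."""
        self.granted.discard(category)
        self.events = [e for e in self.events if e[0] != category]

    def record(self, category: str, payload: Dict[str, Any]) -> bool:
        """Store the event if consent exists; return whether it was stored."""
        if category not in self.granted:
            return False
        self.events.append((category, payload))
        return True
```

Keeping sensitive streams such as gaze data in their own category means a user can allow basic analytics without also agreeing to biometric collection.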

What This Means for Developers, Product Leaders & Businesses

If you’re thinking about leveraging VR or XR in 2026 — whether for enterprise, consumer, or hybrid products — here are strategic recommendations:

- Think XR-first, not VR-first. Evaluate use cases for mixed reality or smart glasses first. For many workflows (collaboration, design, training), lighter XR devices offer better usability, lower friction, and greater adoption potential.

- Design for modularity and scalability. Build content pipelines and architectures that can adapt as hardware evolves — e.g., support both standalone VR and cloud streaming, or allow fallbacks for XR glasses.

- Leverage AI & Cloud early. Use generative tools, AI-based avatars, adaptive UI, cloud streaming — especially if you aim for scalability, multi-device distribution, or dynamic experiences.

- Prioritize user comfort, privacy, and ethics. Ensure clear privacy policies, easy opt-in/opt-out flows, minimal intrusive data collection, and comfort-aware design. These are non-negotiable for enterprise deployment or consumer trust.

- Start with high-impact use cases. Choose domains where VR/XR delivers clear value (training safety, product visualization, remote collaboration, simulation). Use data (time saved, error reduction, engagement uplift) to justify investment.

- Invest in cross-disciplinary talent. A successful VR/XR project often needs 3D artists, UX/spatial designers, AI engineers, performance engineers, and product strategists. Build a team that reflects that mix.

Conclusion — VR Development in 2026: From Hype to Strategic Reality

In 2026, VR development is not a speculative hobby; it’s a strategic capability. The convergence of hardware evolution, market rebound, AI, cloud, and real-world needs has shifted immersive technology from “novelty experiment” to “business enabler.” VR/XR now offers real value: safer training, more intuitive collaboration, engaging education, immersive product experiences, and new forms of spatial communication. For those building in this space, whether as creators, entrepreneurs, or enterprise leaders, knowing how to navigate VR development with awareness of its technical demands, human-centric design principles, market context, and emerging trends will be critical. If you adopt immersive experiences thoughtfully, with modular architecture, user-first design, performance discipline, and ethical considerations, you stand to create products and experiences that don’t just look modern, but actually make a difference. 2026 could very well become the year immersive tech crosses into ubiquity.
